Diagnostic

Interpreting the Data

The purpose of the Diagnostic reports is to help teachers assess the growth of students at different achievement levels. These reports can help teachers and administrators set priorities for differentiating instruction to meet the needs of students at all achievement levels.

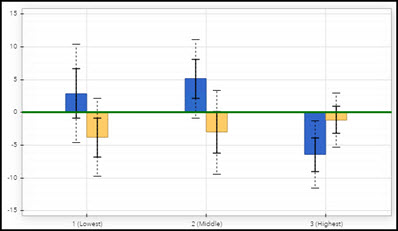

When the Diagnostic report opens, the data is displayed in a bar chart.

In this chart, the blue bars represent the growth of students in the most recent year. If data from the previous year is available, the chart also shows gold bars. The gold bars represent the growth of students for whom this teacher had instructional responsibility in the same subject or grade in the previous year. (Because a teacher has instructional responsibility for different students each year, these are not the same students represented by the blue bars.) The green line represents the growth standard.

Each bar has solid and dotted black whiskers. The solid whiskers mark one standard error above and below the growth measure, and the dotted whiskers mark two standard errors above and below it. It's important to consider the standard error as you interpret the growth measures represented by the bars, and to look at the overall pattern of the bars rather than focusing on any individual value. Even so, the following guidelines can be helpful.

- A bar that is at least one standard error above the line suggests that the group's average achievement level increased. If the bar is at least two standard errors above the line, the evidence of growth is even stronger.

- Likewise, if the bar is at least one standard error below the green line, the group likely lost ground academically, on average. If the bar is at least two standard errors below the line, the evidence is stronger.

- Regardless of whether the bar is above or below the green line, if it is within one standard error of the line, the evidence suggests the group's average achievement did not increase or decrease.
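The guidelines above boil down to measuring how far a bar sits from the green line in units of its standard error. The sketch below illustrates that arithmetic. It assumes each bar is described only by its growth measure and standard error, and it treats the green line's value as an input (`growth_standard`) rather than assuming a particular number. The function names and the sample values are hypothetical and are not part of PVAAS.

```python
def whiskers(growth_measure: float, standard_error: float) -> dict:
    """Return the (low, high) endpoints of a bar's solid and dotted whiskers."""
    return {
        # Solid whiskers: one standard error above and below the growth measure.
        "solid": (growth_measure - standard_error, growth_measure + standard_error),
        # Dotted whiskers: two standard errors above and below the growth measure.
        "dotted": (growth_measure - 2 * standard_error, growth_measure + 2 * standard_error),
    }


def interpret_bar(growth_measure: float, standard_error: float, growth_standard: float) -> str:
    """Apply the one- and two-standard-error guidelines to a single bar."""
    if standard_error <= 0:
        raise ValueError("standard_error must be positive")
    # Distance between the bar and the green line, measured in standard errors.
    distance = (growth_measure - growth_standard) / standard_error
    if distance >= 2:
        return "increased (stronger evidence)"
    if distance >= 1:
        return "increased"
    if distance <= -2:
        return "lost ground (stronger evidence)"
    if distance <= -1:
        return "lost ground"
    return "no clear increase or decrease"


# Hypothetical values for illustration only; not real PVAAS data.
print(whiskers(2.0, 0.5))  # {'solid': (1.5, 2.5), 'dotted': (1.0, 3.0)}
print(interpret_bar(2.0, 0.5, growth_standard=0.0))   # increased (stronger evidence)
print(interpret_bar(-0.3, 0.5, growth_standard=0.0))  # no clear increase or decrease
```

As the text notes, these thresholds are rules of thumb; the overall pattern across bars matters more than any single classification.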

Keep in mind that the percentage of instructional responsibility is factored only into the analysis that generates the growth measures on the value-added reports; it is not included in the calculation of the growth measures on the Diagnostic reports. Instead, on the Diagnostic reports, the growth measure for each group reflects the influence of all teachers who had instructional responsibility in the selected grade and subject or Keystone content area.

It can also be helpful to view the pie chart.

The size of each pie slice represents the percentage of students who were in each of the achievement groups. Students are placed into these groups based on where their achievement level in this subject falls in the reference group distribution, so a teacher's students might not be evenly distributed across the three groups. Teachers who serve a lower-achieving population will see more students in achievement group 1, whereas teachers with a large number of high-achievers will see more students in achievement group 3. It's possible for students at all achievement levels to demonstrate growth.
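To make the slice sizes concrete, the short sketch below converts hypothetical counts of students per achievement group into the percentages a pie chart would show. It does not model how students are placed into groups, since that depends on the reference group distribution; the function name and the counts are illustrative assumptions.

```python
def slice_percentages(counts: dict) -> dict:
    """Return each achievement group's share of students as a percentage."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one student is required")
    return {group: 100 * n / total for group, n in counts.items()}


# Hypothetical counts for a teacher serving a lower-achieving population.
counts = {1: 12, 2: 6, 3: 2}
print(slice_percentages(counts))  # {1: 60.0, 2: 30.0, 3: 10.0}
```

In this made-up example, achievement group 1 fills most of the pie, which is the uneven distribution the paragraph describes for a lower-achieving population.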

The slices of the pie are color-coded to indicate whether the group's average achievement level increased, decreased, or remained about the same compared to the growth standard.

| Color Indicator | Growth Measure Compared to the Growth Standard | Interpretation |
|---|---|---|
| Light Blue | At least one standard error above | Moderate evidence that the group exceeded the growth standard. |
| Green | Between one standard error above and one standard error below | Evidence that the group met the growth standard. |
| Yellow | More than one standard error below | Moderate evidence that the group did not meet the growth standard. |
| White | N/A | The group did not have enough students to generate a growth measure. |
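The color rules in the table can be read as a simple decision procedure. The sketch below follows the table's wording literally ("at least one standard error above" for Light Blue, "more than one standard error below" for Yellow). It represents a group without a growth measure as `None`, and it takes the growth standard as an input; the function name, the `None` convention, and the placeholder value `0.0` are assumptions made for illustration, not PVAAS conventions.

```python
from typing import Optional


def slice_color(growth_measure: Optional[float],
                standard_error: Optional[float],
                growth_standard: float) -> str:
    """Map a group's growth measure to the pie-slice color described in the table."""
    # White: too few students, so no growth measure was generated.
    if growth_measure is None or standard_error is None:
        return "White"
    difference = growth_measure - growth_standard
    # Light Blue: at least one standard error above the growth standard.
    if difference >= standard_error:
        return "Light Blue"
    # Yellow: more than one standard error below the growth standard.
    if difference < -standard_error:
        return "Yellow"
    # Green: between one standard error above and one standard error below.
    return "Green"


# Hypothetical (growth measure, standard error) pairs; 0.0 is an arbitrary placeholder
# for the growth standard.
for measure, se in [(1.2, 1.0), (0.4, 1.0), (-1.5, 1.0), (None, None)]:
    print(measure, slice_color(measure, se, growth_standard=0.0))
```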

As you reflect on these reports, you might want to ask yourself these questions:

- Did each group make enough growth to at least meet the growth standard?

- Is there a difference in the amount of growth the groups made? For example, did the group of high-achievers make significantly more growth than the low-achievers?

- If there is a difference in the amount of growth across achievement groups, what factors might have contributed to the differences?

- How can this information inform instructional practices, strategies, and programs?